Due to the strikingly rapid development of artificial intelligence (AI), demands for regulation in accordance with ethical standards are growing ever louder. Most recently, this culminated in a petition - signed by big names in the tech industry (including Apple co-founder Steve Wozniak and Elon Musk, who was himself an early investor in OpenAI) - calling for a pause in AI development for at least half a year. Shortly before that, the German Ethics Council published a 300-page study on that very topic, "Man - Machine", yet it does not really dare to draw a groundbreaking conclusion and instead refers to case-by-case consideration.

The U.S. is far from regulating AI by law. Parts of Congress are instead busy embarrassing themselves once again with technically unsophisticated questions to ByteDance and TikTok - in full view of millions of American TikTok users.

Only the EU, with its AI Act, seems to have some kind of plan for how to regulate AI. But what does it actually mean to regulate AI according to ethical standards, and why do we have so many ethical concerns about this innovation?

Since the launch of ChatGPT, AI has been a major topic of conversation. Business, politics and society are rightly discussing the technology, which Bill Gates, writing in his "Gates Notes" under the title "The Age of AI Has Begun," now calls the most groundbreaking invention since the PC and the Internet. That Bill Gates is a fan of AI comes as no surprise: Microsoft is heavily invested in OpenAI, making it a big beneficiary of this development. ChatGPT reached the 1 million user mark in its first week, breaking all records.

By comparison, it took Spotify 5 months, Facebook 10 months, and Netflix 3.5 years to reach the same mark. By 2025, the global AI market is expected to reach $60 billion while generating $2.9 trillion in economic growth.

In summary, the pace of AI advancements has never been faster, and the results have never been better.

Of course, innovations that unfold in public, move fast and affect people are not only celebrated. Artificial intelligence in particular has prominent critics and also stirs fears within society.

AI is sometimes even perceived as a threat. 35% of Americans would never want to buy a self-driving car. 60% of consumers would have reservations about taking out insurance via a chatbot.

Are we watching an innovation that no one wants in the end? According to the World Economic Forum, the use of AI will make a total of 85 million jobs redundant. Are these the fears of industry outsiders and cultural pessimists?

Criticism of AI has reached a new dimension since March 22, 2023: an open letter, published by the Future of Life Institute and prominently signed by tech billionaire Elon Musk, calls for a halt to the development of artificial intelligence for at least six months. It has already found hundreds of well-known supporters and 27,000 others. Among them are Apple co-founder Steve Wozniak and Yoshua Bengio, who received the Turing Award for his research on artificial neural networks and deep learning, as well as other entrepreneurs and scientists from the AI industry.

The letter states almost dramatically that the "dangerous race must be stopped," urging AI labs to "immediately pause training of AI systems more powerful than GPT-4 for at least six months. This pause should be public, verifiable, and include all key stakeholders."

The crux of the criticism, according to the letter, is the so-called black-box models, where even the developers can no longer understand how the systems arrive at their results. In contrast to this rather sober demand, the letter is also quite polemical and paints a dystopian future in places. For example, at one point it says, "Should we develop non-human intelligences that could someday outnumber us, outsmart us, make us obsolete, and replace us? Should we risk the loss of control over our civilization?"

The letter offers further criticism, particularly of AI systems that can now generate deceptively real photos. For example, it says, "Today's AI systems are becoming more and more competitive for humans in general tasks, and we must ask ourselves: should we allow machines to flood our information channels with propaganda and falsehoods? Should we automate all jobs, even fulfilling ones?"

The open letter is an attack on AI research in general, but on OpenAI in particular. How the letter should be classified, and what actually motivates it, remains controversial. Elon Musk himself was a co-founder of OpenAI, which was originally a non-profit.

According to a report by the news website Semafor, Musk tried to take over the company and left after the attempt failed. OpenAI is currently the undisputed market leader, so the letter could also simply be an attempt to buy some time in this fierce race among AI companies. After all, Musk is probably planning to launch a competing AI himself.

The open letter, which stirs up existing fears of technical innovation, could therefore be pursuing hidden economic self-interest. But even if not, the letter is quite polemical and, considering how many entrepreneurs and scientists from the AI industry signed it, not very in-depth on the technical aspects, a point that the CEO of OpenAI, Sam Altman, also criticizes.

Unquestionably, it makes sense to take a closer look at a technology that is so promising and widely applicable. As a society, then, we should consider how to harness emerging AI so that, in the end, humans are the sole beneficiaries of the innovation. And as with every major innovation before it, fundamental ethical questions will arise. But how should AI be classified from an ethical perspective?

In more and more articles, one reads calls for regulations of AI according to ethical standards. In March, the German Ethics Council published a 300-page study on the relationship between humans and machines. But what are ethical standards and how does one integrate AI into our basic ethical concepts? Does AI already possess a moral capacity? Is it even more than just another - admittedly powerful - human tool?

Ethics, derived from the ancient Greek word ḗthos (ἦθος) meaning 'character, nature, custom, habit', is a philosophical discipline first introduced as such by Aristotle. It is the doctrine or science that distinguishes between good and evil actions. It investigates moral facts and evaluates (human) actions in terms of their moral rightness.

Morality refers to all norms (ideals, values, rules or judgments) that govern the actions of people or societies, as well as the feeling of guilt that arises when these normative orientations are violated.

Kant first narrowed the concept of morality to the conscience of the individual. Since Hegel, a distinction has been made between individual conviction, i.e. morality, and ethical life (Sittlichkeit), i.e. the rules of a society that are laid down in law and the constitution and are historically and culturally conditioned. It follows from this that ethical standards always require social and historical context. Ethics from our Western point of view differs from ethics from an Arab or Asian point of view.

The major studies on ethical thought about AI essentially revolve around the basic question of whether AI is a moral actor at all, and then address individual scenarios, asking how to act in which situation. So far, a generally applicable signpost remains absent. So what role does AI play in our ethical construct?

Artificial intelligence describes systems that are able to imitate human abilities such as logical thinking, planning, learning and creativity. AI enables technical programs and systems to perceive parts of their environment, deal with what they perceive, and solve problems to achieve a specific goal.

Data is processed and acted upon. More precisely, input data of any origin is processed by the computer on the basis of an ML algorithm (figuratively, its work order). The result is a so-called output decision, which can be, for example, a selection of results, a single result or even an action.
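The flow from input data through an ML algorithm to an output decision can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the weather data, the nearest-neighbour rule and the names `EXAMPLES`, `rank_labels`, `predict`, `act` and `ACTIONS` are all invented for this example and do not describe any particular AI system.

```python
from math import dist

# Invented toy data: (hours of sun, rainfall in mm) -> label.
EXAMPLES = [
    ((9.0, 1.0), "sunny"),
    ((8.0, 3.0), "sunny"),
    ((2.0, 12.0), "rainy"),
    ((1.0, 20.0), "rainy"),
]

# Hypothetical mapping from a predicted label to an action.
ACTIONS = {"sunny": "open the blinds", "rainy": "close the windows"}


def rank_labels(x):
    """Output as a selection of results: labels ordered from best to worst match."""
    best = {}
    for features, label in EXAMPLES:
        d = dist(features, x)  # Euclidean distance to a known example
        if label not in best or d < best[label]:
            best[label] = d
    return sorted(best, key=best.get)


def predict(x):
    """Output as a single result: the label of the nearest known example."""
    return rank_labels(x)[0]


def act(x):
    """Output as an action derived from the single result."""
    return ACTIONS[predict(x)]


if __name__ == "__main__":
    observation = (8.5, 2.0)  # input data
    print(rank_labels(observation))
    print(predict(observation))
    print(act(observation))
```

The same input thus yields three kinds of output decision, depending on how much of the final step is delegated to the system rather than to a human.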

The distinctive feature is undoubtedly the versatility of AI. However, not every AI-based system is equally capable or "intelligent". Depending on the area of application, AI is capable of more or less.

Fundamentally, a distinction can be made between weak and strong AI systems. The goal of strong AI is to achieve capabilities that match or exceed those of humans. In this vision, it should possess human cognitive abilities and even emotions. Furthermore, one differentiates between narrow and broad AI, depending on how large the AI's "field of action" is. For example, narrow AI operates in only one domain, while broad AI has extensive access to data sources. The distinction between narrow and broad AI is thus quantitative in nature, and that between weak and strong AI qualitative. Considerations on how to create a socially acceptable framework for AI must take these characteristics into account. They range from the question of which data sources the systems are allowed to access and process to the degree of decision-making and action power they are granted, and how this is then shaped in detail.

According to psychology, human intelligence, on the other hand, is first and foremost a social construct that is assessed with different emphases depending on the culture. Researchers disagree on the question of whether intelligence is to be regarded as a single ability or as the product of several individual abilities.

Human intelligence is described as understanding, judging and reasoning or rational thinking, goal-oriented action and effective interaction with the environment. Basically, however, it can only be observed indirectly. We measure human intelligence through constructed situations in which we evaluate the ability to find solutions (IQ tests).

The role of creativity in intelligence must also be considered when comparing human and artificial intelligence. A distinction is made between convergent and divergent thinking. When we think convergently, we look for the one best solution, as in intelligence tests; when we think divergently, we look for multiple imaginative solutions.

Furthermore, more and more is now attributed to the concept of intelligence, for example social and emotional intelligence. The same applies to the terms embodied, embedded, enactive and extended cognition, which are used in psychological and philosophical assessments in robotics to evaluate the role of the body and the environment of robotic systems.

Accordingly, it is questionable to what extent the concept of intelligence can be applied to artificial intelligence at all and whether it should not rather be interpreted as a metaphorical term.

Alternatively, the concept of reason could encompass the capabilities of AI and thus offer recommendations for action. The concept of reason is older than that of intelligence, and intelligence is a component of reason.

Reason (Latin ratio, "calculation, computation") is a type of thinking that allows the human mind to organize its references to reality. It is basically divided into theoretical and practical reason. While theoretical reason, which relates to gaining knowledge, still has certain parallels to AI systems, practical reason, which aims at coherent, responsible action to enable a good life, is no longer applicable.

At least the AI systems available so far "do not have the relevant capabilities of sense comprehension, intentionality and reference to an extra-linguistic reality".

Neither the concept of intelligence nor the concept of reason can be applied to artificial intelligence at present. An intelligence or reason equal to that of humans is therefore not present. Could the moral capacity of AI nevertheless be derived from other circumstances?

To determine whether moral capability exists, the concept of responsibility must also be considered. It must be clarified which abilities or characteristics must be present for something to be a moral actor, for only a moral agent can bear moral responsibility and thereby have the status of a person. In ethics, persons are moral actors, but not all humans are.

Whether AI is a person in the sense of moral capacity can be determined by Daniel Dennett's "theory of the person." Although humans are the inevitable starting point for determining the status of persons, Dennett does not limit the status of "person" to humans alone, which seems reasonable with regard to the evaluation of non-human actors.

Three of Dennett's criteria for personhood are relevant here:

Intentionality is the subjective act of consciousness directed toward an object. In other words, it describes the felt perception of objects or persons. First-level intentionality means that one perceives only oneself. As soon as one perceives other actors, second-level intentionality is reached; and if one assumes that the counterpart perceived as an actor in turn perceives oneself as an actor, the third level of intentionality is reached.

Linguistic ability, in turn, is the ability to communicate verbally. In principle, this would rule out non-human actors. However, AI has now reached the point where, thanks to NLP and speech synthesis, it has mastered precisely this kind of verbal communication.

According to Dennett, self-consciousness is conscious being, i.e. the perception of oneself as a living being and the ability to reflect on oneself.

In addition, free will can be used as a criterion for moral ability, for whoever does not make free decisions cannot be held to account for their actions. Free will closely resembles Dennett's third-level intentionality: whoever lacks a mental state of self-awareness consequently cannot do anything. According to Aristotle, a person is free who can do what they want without external or internal constraints. Even singularity scenarios in which an AI supposedly makes its own decisions would not establish free will, since the AI remains subject to programmed objectives, such as optimization.

Even according to the theory of the person, AI systems are thus (so far) incapable of being moral agents, and one therefore cannot confer moral rights or duties on them.

More and more articles call for AI to be regulated according to ethical standards. In March, the German Ethics Council published a 300-page study on the relationship between humans and machines. But what are ethical standards, and how does one integrate AI into our basic ethical concepts? Does AI already possess a moral capacity? Is it even more than just another - admittedly powerful - human tool?

Ethics, derived from the ancient Greek word ḗthos (ἦθος) meaning 'character, nature, custom', is a philosophical discipline, first introduced as such by Aristotle. It is the doctrine or science that distinguishes between good and evil actions. It examines moral facts and evaluates (human) actions in terms of their moral rightness.

Morality refers to all norms (ideals, values, rules or judgments) that govern the actions of people or societies, as well as the feeling of guilt that arises when these norms are violated.

Kant first narrowed the concept of morality to the conscience of the individual. Since Hegel, a distinction has been made between individual conviction, i.e. morality (Moralität), and ethical life (Sittlichkeit), i.e. the rules of a society that are laid down in law and the constitution and are historically and culturally conditioned. It follows that ethical standards always require a social and historical context. Ethics from our Western point of view differs from ethics from an Arab or Asian point of view.

The major studies on the ethics of AI essentially revolve around the basic question of whether AI is a moral actor at all, and then address individual scenarios, setting out where possible how to act in each situation. So far, a generally applicable guideline is missing. So what role does AI play in our ethical construct?

Artificial intelligence describes systems that are able to imitate human abilities such as logical thinking, planning, learning and creativity. AI enables technical programs and systems to perceive parts of their environment, deal with what they perceive, and solve problems to achieve a specific goal.

Data is processed and acted upon. More precisely, input data of any origin is processed by the computer on the basis of an ML algorithm (in effect, a work order). Finally, an output decision is presented, which can be, for example, a selection of results, a single result or even an action.
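The flow from input data through an algorithm to an output decision can be illustrated with a very small sketch. Everything in it is an assumption made for illustration: the sample data, the labels and the function names `fit` and `decide` are hypothetical, and the nearest-average rule merely stands in for whatever ML algorithm a real system would use.

```python
from statistics import mean

# Hypothetical input data: (hours of sunshine, label). Purely illustrative.
TRAINING = [(1.0, "stay in"), (2.0, "stay in"), (7.0, "go out"), (9.0, "go out")]


def fit(samples):
    """Learn one average value per label from the input data."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def decide(model, value):
    """Output decision: the label whose learned average is closest to the input."""
    return min(model, key=lambda label: abs(model[label] - value))


model = fit(TRAINING)
decision = decide(model, 6.5)
print(decision)
```

The point of the sketch is only that the "decision" is the result of a fixed rule applied to data; the system pursues no goal beyond the one written into `decide`.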

The distinctive feature is undoubtedly the versatility of AI, although not every AI-based system is equally capable or "intelligent". Depending on the area of application, AI is capable of more or less.

Fundamentally, a distinction can be made between weak and strong AI systems. The goal of strong AI is to achieve capabilities that match or exceed those of humans. In this vision, it should possess human cognitive abilities and even emotions. Furthermore, one differentiates between narrow and broad AI, depending on how large the AI's "field of action" is. For example, narrow AI operates in only one domain, while broad AI has extensive access to data sources. The distinction between narrow and broad AI is thus quantitative in nature, and the distinction between weak and strong AI qualitative. Considerations on how to create a socially acceptable framework for AI must take these characteristics into account. They range from which data sources AI systems are allowed to access and process to the degree of decision-making and action power that they are granted, and how this is then shaped in detail.

According to psychology, human intelligence, on the other hand, is first and foremost a constructed, social construct that has different assessment emphases depending on the cultural circle. Researchers disagree on the question of whether intelligence is to be regarded as a single ability or as the product of several individual abilities.

Human intelligence is described as understanding, judging and reasoning or rational thinking, goal-oriented action and effective interaction with the environment. Basically, however, it can only be observed indirectly. We measure human intelligence through constructed situations in which we evaluate the ability to find solutions (IQ tests).

The role of creativity in intelligence must also be considered in terms of comparability between human and artificial intelligence. A distinction is made between convergent and divergent thinking. When we think convergent we look for the one - best - solutions in intelligence tests and when we think divergent we look for multiple imaginative solutions.

Furthermore, more and more is now attributed to the concept of intelligence, for example social and emotional intelligence or the terms embodied, embedded, enactive and extended cognition, they are applied in psychological and philosophical assessment in robotics and evaluate the role of the body and the environment of robotic systems.

Accordingly, it is questionable to what extent the concept of intelligence can be applied to artificial intelligence at all and whether it should not rather be interpreted as a metaphorical term.

Alternatively, the concept of reason could encompass the capabilities of AI and thus offer recommendations for action. We have known the concept of reason longer than that of intelligence, whereby intelligence is a component of reason.

Reason (Latin ratio, "calculation, computation") is a type of thinking that allows the human mind to organize its references to reality. It is basically divided into theoretical and practical reasons. While theoretical reason, which relates to gaining knowledge, still has certain parallels to AI systems, practical reason, which aims at coherent, responsible action to enable a good life, is already no longer applicable.

At least the AI- systems available so far "do not have the relevant capabilities of sense comprehension, intentionality and reference to an extra-linguistic reality".

Neither the concept of intelligence nor the concept of reason can be applied to artificial intelligence at present. An intelligence or reason - equal to the human one - is therefore not present. However, could the moral capacity of AI itself be derived from other circumstances?

In order to determine the existence of moral capability, the concept of responsibility must also be considered. It must be clarified which abilities or characteristics must be present in order to be a moral actor. For only a moral agent can have moral responsibility and thereby have person status. In the doctrine of ethics, persons are moral actors, but not all humans.

Whether AI is a person in the sense of moral capacity can be determined by Daniel Dennett's "theory of the person." Although humans are the inevitable starting point for determining the status of persons, Dennett does not limit the status of "person" to humans alone, which seems reasonable with regard to the evaluation of non-human actors.

Dennett uses three criteria to determine personhood:

Intentionality is the subjective act of consciousness directed toward an object. In other words, it circumscribes the feeling perception of objects/persons. First level intentionality means that one perceives only oneself. As soon as one perceives other actors, second level intentionality is reached and if one now assumes that the counterpart perceived as an actor also perceives oneself in turn as an actor, the third level of intentionality is reached.

Linguistic ability, in turn, is the ability to communicate verbally. In principle, this means that per se no non-human actors come into question. However, AI has now reached the point where, thanks to NLP and speech synthesis, it has mastered precisely this verbal communication and has a speech capability.

According to Dennett, self-consciousness is the conscious being, i.e. the perception of oneself as a living being and the possibility to think about oneself.

In addition, free will can be used as a criterion for moral ability. Because who does not make free decisions, can also not have to justify itself for the actions. Free will is almost similar to the intentionality of Dennett's third stage. For he who has no mental state of self-awareness, consequently cannot be able to do anything. According to Aristotle, he is free to do what he wants without external or internal constraints. Even singularity incidents in which the AI supposedly makes its own decisions would not affirm free will insofar as it is still subject to programmed objectives, be it optimization.

AI systems are thus (so far) incapable of being moral agents even according to person theory, and thus one cannot confer moral rights or duties on them.

In more and more articles, one reads calls for regulations of AI according to ethical standards. In March, the German Ethics Council published a 300-page study on the relationship between humans and machines. But what are ethical standards and how does one integrate AI into our basic ethical concepts? Does AI already possess a moral capacity? Is it even more than just another - admittedly powerful - human tool?

Ethics, derived from the ancient Greek word ḗthos (ἦθος) meaning 'character, nature, custom, custom', is - first introduced as such by Aristotle - a philosophical discipline. It is the doctrine or science that distinguishes between good and evil actions. It fathoms moral facts and evaluates (human) actions in terms of their moral rightness.

Morality refers to all norms (ideals, values, rules or judgments) that govern the actions of people or societies, as well as the reaction with guilt when violating these normative orientations.

After Kant first narrowed the concept of morality to the conscience of the individual, since Hegel a distinction has been made between individual conviction - i.e. morality - and morality, i.e. the rules of a society that are laid down in law and the constitution and are historically and culturally conditioned. It follows from this that ethical standards always require social and historical context. Ethics from our Western point of view differs from ethics from Arabic or Asian point of view.

The major studies regarding ethical thought on AI essentially revolve around the basic question of whether AI is a moral actor at all, and then address individual case scenarios of how to act in which situation if possible. So far, a generally applicable signpost remains absent. So what role does AI play in our ethical construct?

Artificial intelligence describes systems that are able to imitate human abilities such as logical thinking, planning, learning and creativity. AI enables technical programs and systems to perceive parts of their environment, deal with what they perceive, and solve problems to achieve a specific goal.

Data is processed and acted upon. More precisely, there are so-called input data of any origin, which the computer processes on the basis of an ML algorithm (symbolically work order), finally, a so-called output decision is presented, which can be (for example) a result selection, a single result or even an action.

The distinctive feature is undoubtedly the versatility of AI. Whereby not every AI-based system is equally empowered or "intelligent". Depending on the area of application, AI is capable of more or less.

Fundamentally, a distinction can be made between weak and strong AI systems. The goal of strong AI is to achieve human-like or -exceeding capabilities. In this vision, it should possess human, cognitive abilities and even emotions. Furthermore, one differentiates between narrow and broad AI, depending on how large the "field of action" of the AI is. For example, narrow AI operates only on one domain, while broad AI has extensive access to data sources. The distinction between narrow and broad AI is thus quantitative in nature, and between weak and strong AI qualitative. Considerations on how to create a socially acceptable framework for AI must be made with regard to the characteristics of AI. Starting with considerations about which data sources they are allowed to access and process, through to the degree of decision-making and action power that they are granted and how this is then shaped in detail.

According to psychology, human intelligence, on the other hand, is first and foremost a constructed, social construct that has different assessment emphases depending on the cultural circle. Researchers disagree on the question of whether intelligence is to be regarded as a single ability or as the product of several individual abilities.

Human intelligence is described as understanding, judging and reasoning or rational thinking, goal-oriented action and effective interaction with the environment. Basically, however, it can only be observed indirectly. We measure human intelligence through constructed situations in which we evaluate the ability to find solutions (IQ tests).

The role of creativity in intelligence must also be considered in terms of comparability between human and artificial intelligence. A distinction is made between convergent and divergent thinking. When we think convergent we look for the one - best - solutions in intelligence tests and when we think divergent we look for multiple imaginative solutions.

Furthermore, more and more is now attributed to the concept of intelligence, for example social and emotional intelligence or the terms embodied, embedded, enactive and extended cognition, they are applied in psychological and philosophical assessment in robotics and evaluate the role of the body and the environment of robotic systems.

Accordingly, it is questionable to what extent the concept of intelligence can be applied to artificial intelligence at all and whether it should not rather be interpreted as a metaphorical term.

Alternatively, the concept of reason could encompass the capabilities of AI and thus offer recommendations for action. We have known the concept of reason longer than that of intelligence, whereby intelligence is a component of reason.

Reason (Latin ratio, "calculation, computation") is a type of thinking that allows the human mind to organize its references to reality. It is basically divided into theoretical and practical reasons. While theoretical reason, which relates to gaining knowledge, still has certain parallels to AI systems, practical reason, which aims at coherent, responsible action to enable a good life, is already no longer applicable.

At least the AI- systems available so far "do not have the relevant capabilities of sense comprehension, intentionality and reference to an extra-linguistic reality".

Neither the concept of intelligence nor the concept of reason can be applied to artificial intelligence at present. An intelligence or reason - equal to the human one - is therefore not present. However, could the moral capacity of AI itself be derived from other circumstances?

In order to determine the existence of moral capability, the concept of responsibility must also be considered. It must be clarified which abilities or characteristics must be present in order to be a moral actor. For only a moral agent can have moral responsibility and thereby have person status. In the doctrine of ethics, persons are moral actors, but not all humans.

Whether AI is a person in the sense of moral capacity can be determined by Daniel Dennett's "theory of the person." Although humans are the inevitable starting point for determining the status of persons, Dennett does not limit the status of "person" to humans alone, which seems reasonable with regard to the evaluation of non-human actors.

Dennett uses three criteria to determine personhood:

Intentionality is the subjective act of consciousness directed toward an object. In other words, it circumscribes the feeling perception of objects/persons. First level intentionality means that one perceives only oneself. As soon as one perceives other actors, second level intentionality is reached and if one now assumes that the counterpart perceived as an actor also perceives oneself in turn as an actor, the third level of intentionality is reached.

Linguistic ability, in turn, is the ability to communicate verbally. In principle, this means that per se no non-human actors come into question. However, AI has now reached the point where, thanks to NLP and speech synthesis, it has mastered precisely this verbal communication and has a speech capability.

According to Dennett, self-consciousness is the conscious being, i.e. the perception of oneself as a living being and the possibility to think about oneself.

In addition, free will can be used as a criterion for moral ability. Because who does not make free decisions, can also not have to justify itself for the actions. Free will is almost similar to the intentionality of Dennett's third stage. For he who has no mental state of self-awareness, consequently cannot be able to do anything. According to Aristotle, he is free to do what he wants without external or internal constraints. Even singularity incidents in which the AI supposedly makes its own decisions would not affirm free will insofar as it is still subject to programmed objectives, be it optimization.

AI systems are thus (so far) incapable of being moral agents even according to person theory, and thus one cannot confer moral rights or duties on them.

In more and more articles, one reads calls for regulations of AI according to ethical standards. In March, the German Ethics Council published a 300-page study on the relationship between humans and machines. But what are ethical standards and how does one integrate AI into our basic ethical concepts? Does AI already possess a moral capacity? Is it even more than just another - admittedly powerful - human tool?

Ethics, derived from the ancient Greek word ḗthos (ἦθος) meaning 'character, nature, custom, custom', is - first introduced as such by Aristotle - a philosophical discipline. It is the doctrine or science that distinguishes between good and evil actions. It fathoms moral facts and evaluates (human) actions in terms of their moral rightness.

Morality refers to all norms (ideals, values, rules or judgments) that govern the actions of people or societies, as well as the reaction with guilt when violating these normative orientations.

After Kant first narrowed the concept of morality to the conscience of the individual, since Hegel a distinction has been made between individual conviction - i.e. morality - and morality, i.e. the rules of a society that are laid down in law and the constitution and are historically and culturally conditioned. It follows from this that ethical standards always require social and historical context. Ethics from our Western point of view differs from ethics from Arabic or Asian point of view.

The major studies regarding ethical thought on AI essentially revolve around the basic question of whether AI is a moral actor at all, and then address individual case scenarios of how to act in which situation if possible. So far, a generally applicable signpost remains absent. So what role does AI play in our ethical construct?

Artificial intelligence describes systems that are able to imitate human abilities such as logical thinking, planning, learning and creativity. AI enables technical programs and systems to perceive parts of their environment, deal with what they perceive, and solve problems to achieve a specific goal.

Data is processed and acted upon. More precisely, there are so-called input data of any origin, which the computer processes on the basis of an ML algorithm (symbolically work order), finally, a so-called output decision is presented, which can be (for example) a result selection, a single result or even an action.

The distinctive feature is undoubtedly the versatility of AI. Whereby not every AI-based system is equally empowered or "intelligent". Depending on the area of application, AI is capable of more or less.

Fundamentally, a distinction can be made between weak and strong AI systems. The goal of strong AI is to achieve human-like or -exceeding capabilities. In this vision, it should possess human, cognitive abilities and even emotions. Furthermore, one differentiates between narrow and broad AI, depending on how large the "field of action" of the AI is. For example, narrow AI operates only on one domain, while broad AI has extensive access to data sources. The distinction between narrow and broad AI is thus quantitative in nature, and between weak and strong AI qualitative. Considerations on how to create a socially acceptable framework for AI must be made with regard to the characteristics of AI. Starting with considerations about which data sources they are allowed to access and process, through to the degree of decision-making and action power that they are granted and how this is then shaped in detail.

According to psychology, human intelligence, on the other hand, is first and foremost a constructed, social construct that has different assessment emphases depending on the cultural circle. Researchers disagree on the question of whether intelligence is to be regarded as a single ability or as the product of several individual abilities.

Human intelligence is described as understanding, judging and reasoning or rational thinking, goal-oriented action and effective interaction with the environment. Basically, however, it can only be observed indirectly. We measure human intelligence through constructed situations in which we evaluate the ability to find solutions (IQ tests).

The role of creativity in intelligence must also be considered in terms of comparability between human and artificial intelligence. A distinction is made between convergent and divergent thinking. When we think convergent we look for the one - best - solutions in intelligence tests and when we think divergent we look for multiple imaginative solutions.

Furthermore, more and more is now attributed to the concept of intelligence, for example social and emotional intelligence or the terms embodied, embedded, enactive and extended cognition, they are applied in psychological and philosophical assessment in robotics and evaluate the role of the body and the environment of robotic systems.

Accordingly, it is questionable to what extent the concept of intelligence can be applied to artificial intelligence at all and whether it should not rather be interpreted as a metaphorical term.

Alternatively, the concept of reason could encompass the capabilities of AI and thus offer recommendations for action. We have known the concept of reason longer than that of intelligence, whereby intelligence is a component of reason.

Reason (Latin ratio, "calculation, computation") is a type of thinking that allows the human mind to organize its references to reality. It is basically divided into theoretical and practical reasons. While theoretical reason, which relates to gaining knowledge, still has certain parallels to AI systems, practical reason, which aims at coherent, responsible action to enable a good life, is already no longer applicable.

At least the AI- systems available so far "do not have the relevant capabilities of sense comprehension, intentionality and reference to an extra-linguistic reality".

Neither the concept of intelligence nor the concept of reason can be applied to artificial intelligence at present. An intelligence or reason - equal to the human one - is therefore not present. However, could the moral capacity of AI itself be derived from other circumstances?

In order to determine the existence of moral capability, the concept of responsibility must also be considered. It must be clarified which abilities or characteristics must be present in order to be a moral actor. For only a moral agent can have moral responsibility and thereby have person status. In the doctrine of ethics, persons are moral actors, but not all humans.

Whether AI is a person in the sense of moral capacity can be determined by Daniel Dennett's "theory of the person." Although humans are the inevitable starting point for determining the status of persons, Dennett does not limit the status of "person" to humans alone, which seems reasonable with regard to the evaluation of non-human actors.

Dennett uses three criteria to determine personhood:

Intentionality is the subjective act of consciousness directed toward an object. In other words, it circumscribes the feeling perception of objects/persons. First level intentionality means that one perceives only oneself. As soon as one perceives other actors, second level intentionality is reached and if one now assumes that the counterpart perceived as an actor also perceives oneself in turn as an actor, the third level of intentionality is reached.

Linguistic ability, in turn, is the ability to communicate verbally. In principle, this means that per se no non-human actors come into question. However, AI has now reached the point where, thanks to NLP and speech synthesis, it has mastered precisely this verbal communication and has a speech capability.

According to Dennett, self-consciousness is the conscious being, i.e. the perception of oneself as a living being and the possibility to think about oneself.

In addition, free will can be used as a criterion for moral ability. Because who does not make free decisions, can also not have to justify itself for the actions. Free will is almost similar to the intentionality of Dennett's third stage. For he who has no mental state of self-awareness, consequently cannot be able to do anything. According to Aristotle, he is free to do what he wants without external or internal constraints. Even singularity incidents in which the AI supposedly makes its own decisions would not affirm free will insofar as it is still subject to programmed objectives, be it optimization.

AI systems are thus (so far) incapable of being moral agents even according to person theory, and thus one cannot confer moral rights or duties on them.

In more and more articles, one reads calls for regulations of AI according to ethical standards. In March, the German Ethics Council published a 300-page study on the relationship between humans and machines. But what are ethical standards and how does one integrate AI into our basic ethical concepts? Does AI already possess a moral capacity? Is it even more than just another - admittedly powerful - human tool?

Ethics, derived from the ancient Greek word ḗthos (ἦθος) meaning 'character, nature, custom, custom', is - first introduced as such by Aristotle - a philosophical discipline. It is the doctrine or science that distinguishes between good and evil actions. It fathoms moral facts and evaluates (human) actions in terms of their moral rightness.

Morality refers to all norms (ideals, values, rules or judgments) that govern the actions of people or societies, as well as the reaction with guilt when violating these normative orientations.

After Kant first narrowed the concept of morality to the conscience of the individual, since Hegel a distinction has been made between individual conviction - i.e. morality - and morality, i.e. the rules of a society that are laid down in law and the constitution and are historically and culturally conditioned. It follows from this that ethical standards always require social and historical context. Ethics from our Western point of view differs from ethics from Arabic or Asian point of view.

The major studies regarding ethical thought on AI essentially revolve around the basic question of whether AI is a moral actor at all, and then address individual case scenarios of how to act in which situation if possible. So far, a generally applicable signpost remains absent. So what role does AI play in our ethical construct?

Artificial intelligence describes systems that are able to imitate human abilities such as logical thinking, planning, learning and creativity. AI enables technical programs and systems to perceive parts of their environment, deal with what they perceive, and solve problems to achieve a specific goal.

Data is processed and acted upon. More precisely, there are so-called input data of any origin, which the computer processes on the basis of an ML algorithm (symbolically work order), finally, a so-called output decision is presented, which can be (for example) a result selection, a single result or even an action.

The distinctive feature is undoubtedly the versatility of AI. Whereby not every AI-based system is equally empowered or "intelligent". Depending on the area of application, AI is capable of more or less.

Fundamentally, a distinction can be made between weak and strong AI systems. The goal of strong AI is to achieve human-like or -exceeding capabilities. In this vision, it should possess human, cognitive abilities and even emotions. Furthermore, one differentiates between narrow and broad AI, depending on how large the "field of action" of the AI is. For example, narrow AI operates only on one domain, while broad AI has extensive access to data sources. The distinction between narrow and broad AI is thus quantitative in nature, and between weak and strong AI qualitative. Considerations on how to create a socially acceptable framework for AI must be made with regard to the characteristics of AI. Starting with considerations about which data sources they are allowed to access and process, through to the degree of decision-making and action power that they are granted and how this is then shaped in detail.

According to psychology, human intelligence, on the other hand, is first and foremost a social construct whose criteria are weighted differently depending on the culture. Researchers disagree on the question of whether intelligence is to be regarded as a single ability or as the product of several individual abilities.

Human intelligence is described as understanding, judging and reasoning or rational thinking, goal-oriented action and effective interaction with the environment. Basically, however, it can only be observed indirectly. We measure human intelligence through constructed situations in which we evaluate the ability to find solutions (IQ tests).

The role of creativity in intelligence must also be considered in terms of comparability between human and artificial intelligence. A distinction is made between convergent and divergent thinking. When we think convergently, we look for the single best solution, as in intelligence tests; when we think divergently, we look for multiple imaginative solutions.

Furthermore, more and more is now attributed to the concept of intelligence, for example social and emotional intelligence. The terms embodied, embedded, enactive and extended cognition are also applied in the psychological and philosophical assessment of robotics, where they evaluate the role of the body and the environment of robotic systems.

Accordingly, it is questionable to what extent the concept of intelligence can be applied to artificial intelligence at all and whether it should not rather be interpreted as a metaphorical term.

Alternatively, the concept of reason could encompass the capabilities of AI and thus offer recommendations for action. The concept of reason is older than that of intelligence, and intelligence is regarded as a component of reason.

Reason (Latin ratio, "calculation, computation") is a type of thinking that allows the human mind to organize its references to reality. It is basically divided into theoretical and practical reason. While theoretical reason, which relates to gaining knowledge, still has certain parallels to AI systems, practical reason, which aims at coherent, responsible action to enable a good life, is no longer applicable.

At least the AI systems available so far "do not have the relevant capabilities of sense comprehension, intentionality and reference to an extra-linguistic reality".

Neither the concept of intelligence nor the concept of reason can be applied to artificial intelligence at present. An intelligence or reason - equal to the human one - is therefore not present. However, could the moral capacity of AI itself be derived from other circumstances?

In order to determine the existence of moral capability, the concept of responsibility must also be considered. It must be clarified which abilities or characteristics must be present in order to be a moral actor, for only a moral agent can bear moral responsibility and thereby have the status of a person. In ethics, persons are moral actors, but not every human counts as a person in this sense.

Whether AI is a person in the sense of moral capacity can be determined by Daniel Dennett's "theory of the person." Although humans are the inevitable starting point for determining the status of persons, Dennett does not limit the status of "person" to humans alone, which seems reasonable with regard to the evaluation of non-human actors.

Dennett uses three criteria to determine personhood:

Intentionality is the subjective act of consciousness directed toward an object. In other words, it describes the conscious perception of objects or persons. First-level intentionality means that one perceives only oneself. As soon as one perceives other actors, second-level intentionality is reached. If one also assumes that the counterpart perceived as an actor in turn perceives oneself as an actor, the third level of intentionality is reached.

Linguistic ability, in turn, is the ability to communicate verbally. In principle, this would rule out non-human actors altogether. However, AI has now reached the point where, thanks to NLP and speech synthesis, it has mastered precisely this verbal communication.

According to Dennett, self-consciousness means being conscious of oneself, i.e. perceiving oneself as a living being and being able to think about oneself.

In addition, free will can be used as a criterion for moral ability, because whoever does not make free decisions cannot be held to account for their actions. Free will is closely related to Dennett's third level of intentionality, for whoever has no mental state of self-awareness cannot freely decide to do anything. According to Aristotle, a person is free if he can do what he wants without external or internal constraints. Even singularity incidents in which the AI supposedly makes its own decisions would not establish free will, since the AI remains subject to programmed objectives, even if the objective is merely optimization.

AI systems are thus (so far) incapable of being moral agents even according to person theory, and thus one cannot confer moral rights or duties on them.

Even if AI systems themselves do not (so far) carry moral rights and duties, the advancing automation of processes brings with it conflicts of which we should be aware. This is so, on the one hand, because the innovative systems are of course not yet fully mature and completely error-free, and on the other hand, because we as a society must consider which processes we want to leave to AI and to what extent. AI systems, of course, also offer advantages that were previously unthinkable.

One of the big issues that will concern us in the future is the traceability and differentiation of real and AI-generated content (so-called deep fakes), spread by real users or programmed social bots.

The capability of AI now ranges from generating sound, photo or video recordings of ever-improving quality to mass distribution by social bots.

Deep fakes are synthetic media, such as images, audio, or videos, that have been manipulated using AI to appear deceptively real. The AI is trained with images or videos of a person to then generate new footage of that person or alter existing footage to make the person say or do something they didn't actually say or do. Deep fakes can be made not only of politicians or other public figures, but also of private citizens.

Tools like Dall-E are developing so fast that it is only a matter of time before the human eye, or at least the unconcerned user, can no longer distinguish whether what is seen and/or heard is real or generated. These AI-generated deep fakes can then be disseminated on a large scale with the help of AI-based social bots.

Social bots are automated software programs that operate in social media. They automatically generate content such as posts, likes, comments or share posts. The danger posed by social bots is manifold. On the one hand, they can be used to deliberately manipulate public opinion by spreading misinformation or steering discourse. On the other, they can act as fake accounts, making it appear that there is broad public support for an opinion or cause that is in fact controlled by only a few.

Social bots are used by companies, states or political groups to influence opinion and are sometimes difficult to distinguish from genuine users. They thus undermine authenticity and credibility in social networks and endanger our democratic processes.

But even with the simple use of, for example, ChatGPT, we will be confronted with problems. This is because the sources of the responses generated by ChatGPT are not transparent and cannot (so far) be traced. Search results from Google, for example, mostly include a reference to the underlying data sources. This lack of transparency carries the risk that prior manipulation of the data sources of a system such as ChatGPT can influence answers on a large scale.

Only a naïve person would claim that AI qualitatively decreases the credibility of the texts, images and videos to be found on the Internet. Even before social bots, Dall-E and other AI systems, it was always necessary to conduct a serious source analysis. Quantitatively, however, we will certainly face new challenges, as the barrier to creating and disseminating fake news and deep fakes has become lower.

On the other hand, AI itself is also a solution here. AI algorithms can analyze a large amount of content and metadata in a short time to identify inauthentic or manipulated content. As with any technology that can be used for good or ill, an arms race is beginning. While creation and distribution have become much simpler, powerful AI systems are also capable of analyzing vast amounts of data in a short period of time to flag deep fakes. Put simply, the era of (AI) robbers and (AI) gendarmes is beginning - just like in "real" life.

AI-based programs are in high demand in HR departments. The hope is that they will, for example, make recruiting processes faster, more efficient and, above all, fairer. In the traditional recruiting process, there are several factors that can lead to bias: for example, primacy bias and recency bias. Primacy bias means that applicants whose applications recruiters review first are preferred.

The applications that are analyzed at the end are also better remembered and are therefore favored in the selection process; this phenomenon is referred to as recency bias. In contrast, the applicants who are in the middle of the pile of applications are disadvantaged. Furthermore, there is also unconscious bias, which refers to the process of unconsciously and unintentionally forming personal opinions and thus prejudices against other people.

The aim is to counteract such cognitive distortions on the part of recruiters with software. After all, a computer cannot have any subconscious prejudices or unfounded sympathies towards applicants or employees. At least that is the theory. Unfortunately, the use of software occasionally leads to so-called AI bias.

An AI bias describes a bias in artificial intelligence that leads to unfair or discriminatory treatment of certain groups. Such a bias can be deliberately programmed in, but can also creep in unintentionally during the training of the AI. Due to the large amount of training data and the complexity of AI models, it is often difficult to detect or avoid such biases.
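How such a bias can creep in unintentionally during training can be shown with a minimal sketch. The data below is entirely invented for illustration, as are the group labels "A" and "B" and the function names; the "model" merely learns the historical hiring rate per group, which is a much simpler technique than any real recruiting system would use. The point is only that a system trained on skewed past decisions reproduces the skew.

```python
from collections import defaultdict

# Hypothetical historical records: (group, was_hired). Invented numbers.
# Group A dominates the data and was hired more often in the past.
history = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 5 + [("B", False)] * 15
)

def train_hire_rates(records):
    """'Train' by computing the historical hiring rate for each group."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, was_hired in records:
        total[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / total[group] for group in total}

def score(model, group):
    """Score an applicant using nothing but the learned group rate."""
    return model[group]

def selection_rate_ratio(model, group, reference):
    """Ratio of two groups' scores; values far below 1 indicate a disparity."""
    return model[group] / model[reference]

model = train_hire_rates(history)

# Two otherwise identical applicants receive different scores
# purely because of the group they belong to.
print(score(model, "A"))  # 0.6
print(score(model, "B"))  # 0.25
print(round(selection_rate_ratio(model, "B", "A"), 2))  # 0.42
```

Nobody programmed a preference for group A here. The disparity comes solely from the historical records, which is why it can be hard to notice in larger and more complex models.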

For example, Amazon's experience with this problem became public in 2018. Its hiring algorithm had been trained on historical data, reportedly résumés submitted to the company over roughly a decade, most of which came from men. As a result, male applicants received higher scores from the AI and were favored in the recruiting process.

Amazon's AI recruiting process was stopped because of discrimination against women, among others.

Nevertheless, opportunities for use are seen where it simplifies the day-to-day work of HR departments. "But it remains people business with real people who will be data-driven," Stefan says. "In the long run, AI use in HR management will prevail," Stefan continues, "simply because of increasingly data-oriented and information-driven business management, even outside of HR."

So, as soon as you can guarantee error-free training of AI systems with qualified training data, the technology in HR management is absolutely viable for the future. It's also important to remember that the hurdle is a human-made one, because human decisions or historical circumstances make the training data unusable. The AI systems are ready, we just need to break the spiral to create a more equitable HR management.

Next, of course, is the groundbreaking increase in efficiency that promises to make day-to-day work easier for us humans. Entire processes become simpler, faster and safer. Humans save time on monotonous, repetitive tasks, which they can now put into creative projects and thus drive innovation.

Even though AI will make 85 million jobs redundant, according to the World Economic Forum, it will create 97 million new ones, according to the same study. AI-based software is truly transforming the world of work, not just changing it.

The way we work will be even more transformed in the future as Natural Language Processing revolutionizes command input. Instead of operating individual tools and carrying out the steps to the desired end result ourselves, we will simply give the AI the target idea via voice input and only fine-tune the so-called prompts. User interfaces will become much more individual, since the operator, even as a non-programmer, will be able to formulate the target idea of his user interface, and the AI will do the rest.

Technical know-how to operate this new type of software should find its way into schools, so that we give upcoming generations a good start in this world. What will be new about this revolution is that - unlike industrialization - it will affect not simple manual jobs, but the majority of classic administrative office jobs. The people whose jobs will become redundant as a result of AI should be offered extensive training so that they are subsequently able to re-enter the labor market well trained. Provided we shape the change in a social and sensible way, we will emerge as a stronger society and benefit from this technology across the board.

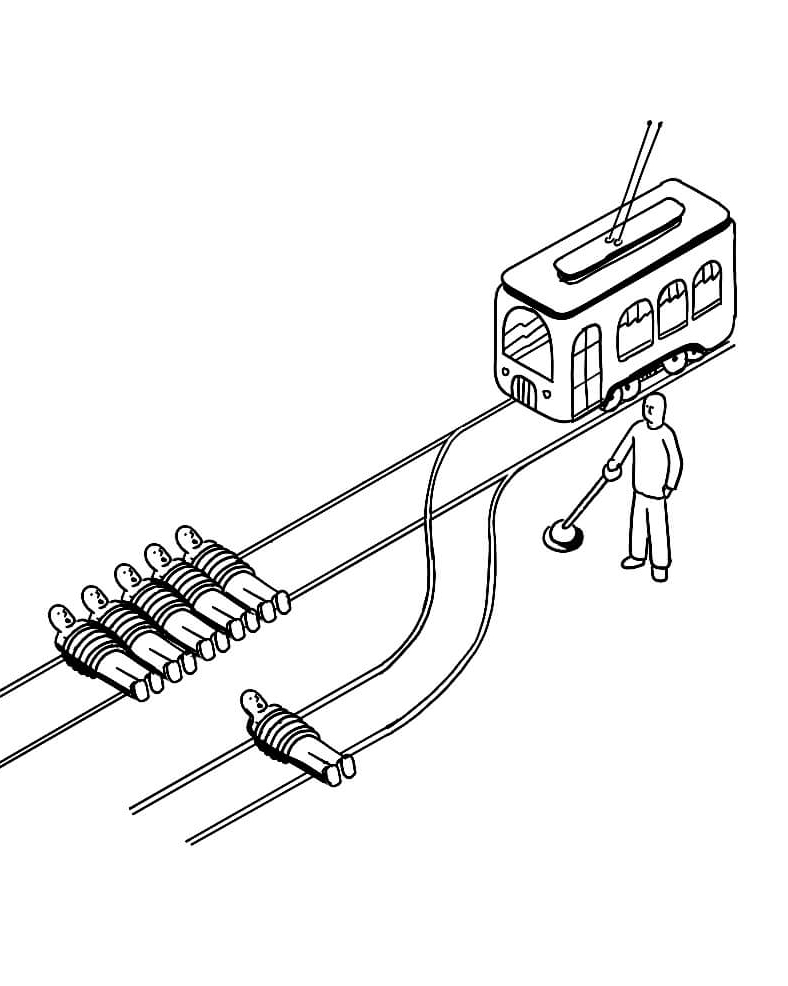

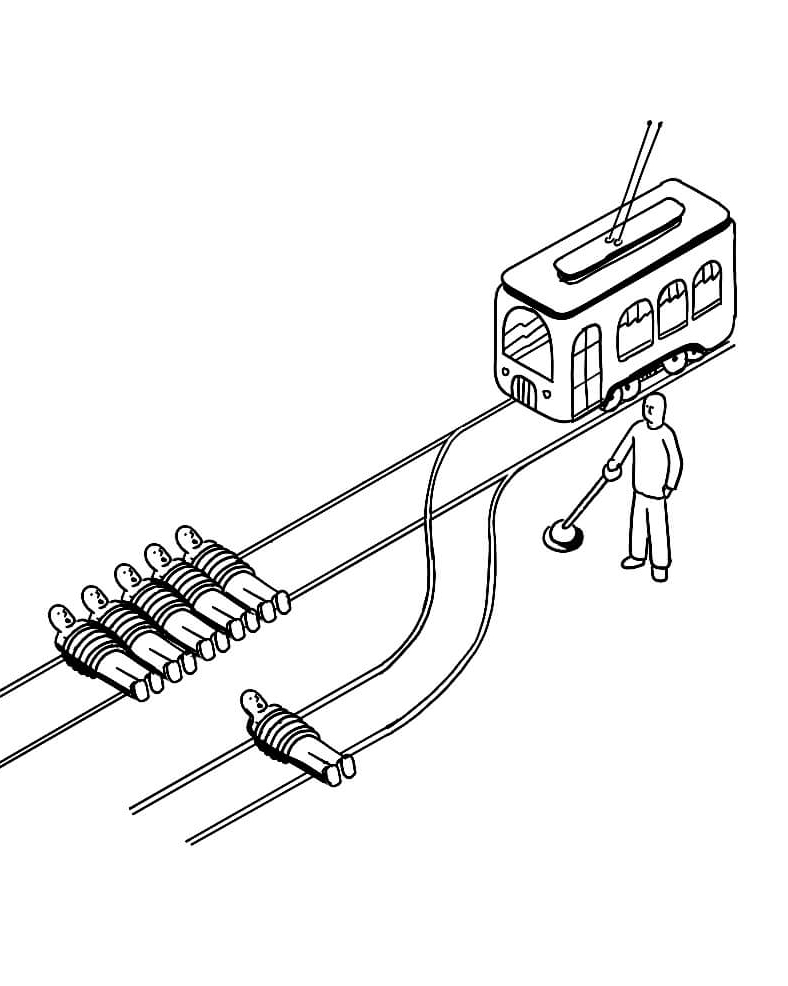

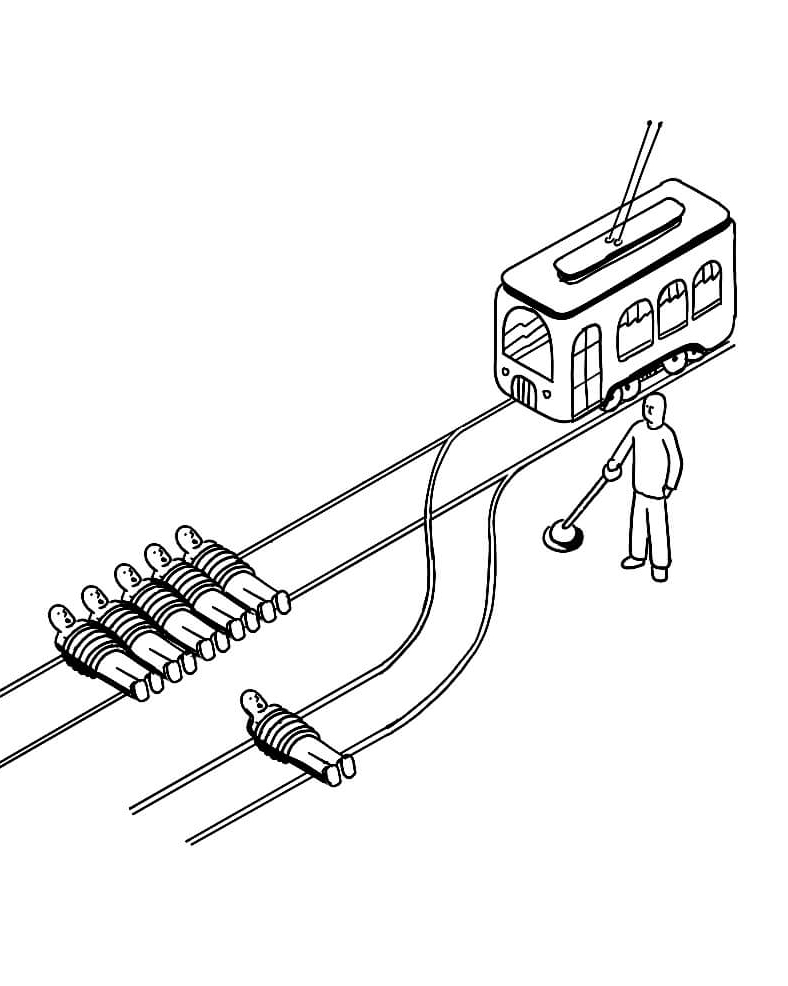

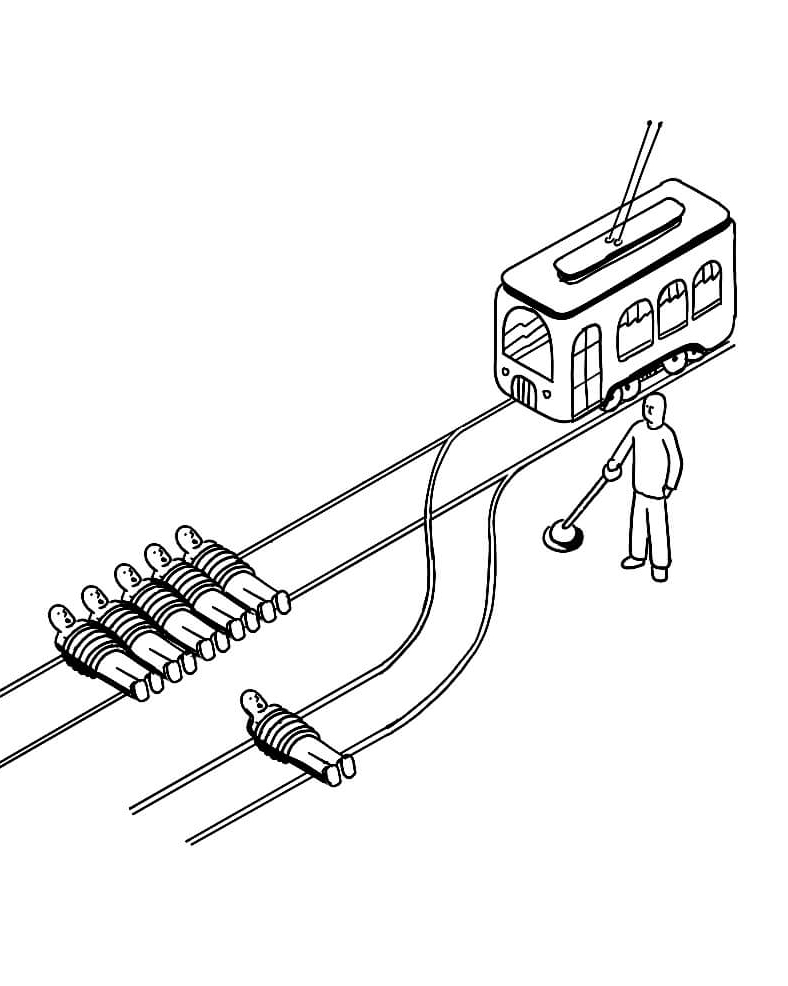

The vision of researchers and carmakers is that AI-based autonomous driving will make road traffic safer and smoother. However, it becomes particularly ethically explosive when robots can endanger human health. Until a large proportion of all automotive road users are actually autonomous, we are in the midst of an exciting transformation process. This is where technical progress, ethical concerns and lengthy legislative processes collide in particular.